Fabrication of celebrity porn pics is

nothing new. However, in late 2017, a user on Reddit named Deepfakes started

applying deep learning to fabricate fake videos of celebrities. That starts a

new wave of fake videos online. DARPA, as part of the US military, is also

funding research in detecting fake videos. Actually, applying AI to create

videos started way before Deepfakes. Face2Face and UW’s “synthesizing Obama

(learning lip sync from audio)” create fake videos that are even harder to

detect. In fact, they are so real that Jordan Peele created one below to warn

the public.

In this article, we explain the concept

of the Deepfakes. We locate some of the difficulties and explain ways to

identify the fake videos. We also look into a research at University of

Washington in creating videos that can lip sync with a potential fake audio.

Basic concept

The concept of Deepfakes is very simple. Let’s

say we want to transfer the face of a person A to a video of person B.

First, we collect hundreds or thousands of pictures

for both persons. We build an encoder to encode all these pictures using a deep

learning CNN network. Then we use a decoder to reconstruct the image.

This autoencoder (the encoder and the decoder) has

over million parameters but is not even close enough to remember all the

pictures. So the encoder needs to extract the most important features to

recreate the original input. Think about it as a crime sketch. The features are

the descriptions from a witness (encoder) and a composite sketch artist (decoder)

uses them to reconstruct a picture of the suspect.

To decode the features, we use separate decoders

for person A and person B. Now, we train the encoder and the decoders (using

backpropagation) such that the input will match closely with the output. This

process is time-consuming. With a GPU graphic card, it takes about 3 days to

generate decent results. (after repeat processing images for about 10+ million

times)

After the training, we process the

video frame-by-frame to swap a person face with another. Using face detection,

we extract the face of person A out and feed it into the encoder. However,

instead of feeding to its original decoder, we use the decoder of the person B

to reconstruct the picture. i.e. we draw person B with the features of A in the

original video. Then we merge the new created face into the original image.

Intuitively, the encoder is detecting

face angle, skin tone, facial expression, lighting and other information that

is important to reconstruct the person A. When we use the second encoder to

reconstruct the image, we are drawing person B but with the context of A. In

the picture below, the reconstructed image has facial characters of Trump while

maintaining the facial expression of the target video.

Image

Before the training, we need to prepare thousands

of images for both persons. We can take a shortcut and use face detection

library to scrape facial pictures from their videos. Spend significant time to

improve the quality of your facial pictures. It impacts your final result significantly.

·

Remove any picture

frames that contain more than one person.

· Make sure your

have an abundance of video footage. Extract facial pictures contains different

pose, face angle and facial expressions.

·

Remove any bad

quality, tinted, small, bad lighting or occluded facial pictures.

·

Some resembling of

both persons may help, like similar face shape.

We don’t want our autoencoder to simply remember the training input and

replicate the output directly. Remember all possibilities is not feasible. We

introduce denoising to introduce data variants and to train autoencoder to

learn smartly. The term denoising may be misleading. The main concept is to

distort some information but we expect the autoencoder smartly ignore this

minor abnormality and recreate the original. i.e. let’s remember what is

important and ignore the un-necessary variants. By repeating the training many

times, the information noise will cancel each other and eventually forgotten.

What is left is the real patterns that we care.

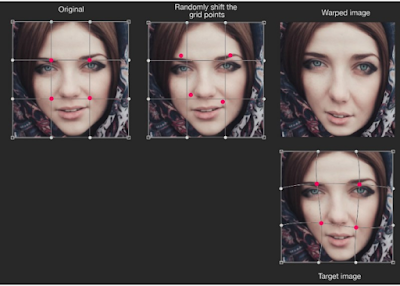

In our facial picture, we select 5 × 5 grid points and shift them

slightly away from their original positions. We use a simple algorithm to warp

the image according to those shifted grid points. Even the warped image may not

look exactly right, but that is the noise that we want to introduce. Then we

use a more complex algorithm to construct a target image using the shifted grid

points. We want our created images to look as close as the target images.

A 2 × 2 grid point example.

It seems odd but that forces the

autoencoder to learn the most important features.

To handle different pose,

facial angles and locations better, we also apply image augmentation to enrich

the training data. During training, we rotate, zoom, translate and flip our

facial image randomly within a specific range.

Deep network model

Let’s take a short break to illustrate how the

autoencoder may look like. (Some basic knowledge of CNN is needed here.) The

encoder composes of 5 convolution layers to extract features followed by 2

dense layers. Then it uses a convolution layer to upsampling the image. The

decoder continues the upsampling with 4 more convolution layers until it reconstructs

the 64 × 64 image back.

To upsample the spatial

dimension say from 16 × 16 to 32 × 32, we use a convolution filter (a 3 × 3 ×

256 × 512 filter) to map the (16, 16, 256) layer into (16, 16, 512). Then we

reshape it to (32, 32, 128).

Problems

Don’t get too excited. If you use a bad

implementation, a bad configuration or your model is not properly trained, you

will get the result of the following video instead. (Check out the first few

seconds. I have marked the video around 3:37 already.)

The facial area is flicking, blur with bleeding color. And there are

obvious boxes around the face. It looks like people pasting pictures onto his

face by brute force. These problems are easily understood if we explain how to

swap face manually.

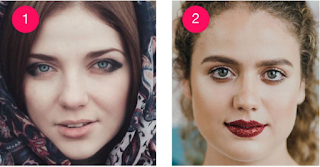

We start with two pictures (1 and 2) for 2 women. In picture 4, we try

to paste the face 1 onto 2. We realize that their face is very different and

the face cutout (the red rectangle) is way too big. It just looks like someone

put a paper mask on her. Now, let’s try to paste face 2 onto 1 instead. In

picture 3, we use a smaller cutout. We create a mask that removes some of the

corner areas so the cutout can blend in better. It is not great but definitely

better than 4. But there is a sudden change in skin tone around the boundary

area. In picture 5, we reduce the opacity of the mask around the boundary so

the created face can blend in better. But the color tone and the brightness of

the cutout still does not match the target. So in picture 6, we adjust the

color tone and the brightness of the cutout to match our target. It is not good

enough yet but not bad for our tiny effort.

In Deepfakes, it creates a mask on the created face so it can blend in

with the target video. To further eliminate the artifacts, we can

·

apply a Gaussian filter to further diffuse the mask

boundary area,

·

configure the application to expand or contract the mask

further, or

·

control the shape of the mask.

If you look closer to a fake video, you may notice double chins or

ghost edges around the face. That is the side effect of merging 2 images

together using a mask. Even the mask improves the quality, there is a price to

pay. In particular, most fake videos I see, the face is a little bit bury

comparing with other parts of the image. To counterbalance it, we can configure

Deepfakes to apply sharpen filter to the created face before the blending. This

is a trial and error process to find the right balance between artifacts and

sharpness. Obviously, most of the time, we need to create slightly blur images

to remove noticeable artifacts.

Even the autoencoder should create faces to match the target color

tone, sometimes it needs help. Deepfakes provides post processing to adjust the

color tone, contrast and brightness of the created face to match the target

video. We can also apply the cv2 seamless cloning to blend the created image

with the target image using automatic tone adjustment. However, some of these

efforts can be counter productive. We can make a particular frame looks great.

But if we overdo it, it may hurt the temporal smoothness across frames. Indeed,

the seamless clone in Deepfakes is a major possible cause of flicking. So

people often turn seamless off to see if the flicking can be reduced.

Another major source of flicking is the autoencoder fails to create

proper faces. For that, we need to add more diversify images to train the model

better or increase the data augmentation. Eventually, we may need to train the

model longer. In cases where we cannot create the proper face for some video

frames, we skip the problem frames and use interpolation to recreate the

deleted frames.

Landmarks

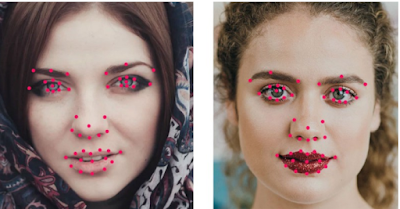

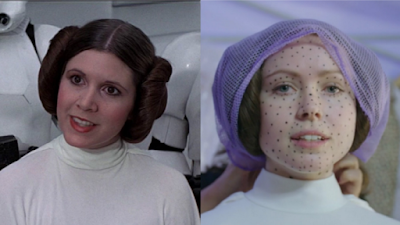

We can also warp our created face according to the face landmarks in

the original target frame.

This is how Rogue One warp the younger

Princess Leia face onto another actress.

Better mask

In our previous effort, our mask is

pre-configured. We can do a much better job if our mask is related to the input

image and the created face.

GAN

In GAN, we introduce a deep network discriminator (a

CNN classifier) to distinguish whether facial images are original or created by

the computer. When we feed real images to this discriminator, we train the

discriminator itself to recognize real images better. When we feed created

images into the discriminator, we use it to train our autoencoder to create

more realistic images. We turn this into a race that eventually the created

images are not distinguishable from the real ones.

In additional, our decoder generates images as

well as masks. Since these masks are learned from the training data, it can

mask the image better and create a smoother transition to the target image.

Also, it handles partial obstructed face better. In may fake videos, when the

face is partially blocked by a hand, the video may flick or turn bury. With a

better mask, we can mask out the obstructed area in the created face and use

the part in the target image instead.

Even though GAN is

powerful, it takes very long to train and require higher level of expertise to

make it right. Therefore, it is not as popular as it should be.

Loss function

Besides the reconstruction cost, GAN adds

generator and discriminator cost to train the model. Indeed, we can add

addition loss functions to perfect our model. One common one is the edge cost

which measures whether the target image and the created image has the same edge

at the same location. Some people also look into the perceptual loss. The

reconstruction cost measures the pixel difference between the target image and

the created image. However, this may not be a good metric in measuring how our

brains perceive objects. Therefore, some people may use perception loss to

replace the original reconstruction loss. This is pretty advance so I will let

those enthusiasts to read the paper in the reference section instead. You can

further analyze where your fake videos perform badly and introduce a new cost

function to address the problem.

Demonstration

Let me pick some of the good Deepfakes videos and

see whether you can detect them now. Play it in slow motion and pay special

attention to:

·

Does it over blur

comparing with other non-facial areas of the video?

·

Does it flick?

·

Does it have a change

of skin tone near the edge of the face?

·

Does it have

double chin, double eyebrows, double edges on the face?

·

When the face is

partially blocked by hands or other things, does it flick or get blurry?

In making fake videos, we

apply different loss functions to make more visual pleasant videos. As shown in

the Trump fake pictures, the features of his face look close to the real one

but it does change if you look closer. Hence, in my opinion, if we feed the

target video into a classifier for identification, there is a good chance that

it will fail. In addition, we can write programs to verify the temporal

smoothness. Since we create faces independently across frames, we should expect

the transition to be less smooth compared to a real video.

Lip

sync from audio

The video made by Jordan Peele is one of the

toughest one to be identified as fake. But once you look closer, the lower lip

of Obama is more blurry comparing with other parts of the face. Therefore,

instead of swapping out the face, I suspect this is a real Obama video but the

mouth is fabricated to lip sync with a fake audio.

For the remaining of this section, we will discuss the lip sync

technology done at the University of Washington (UW). Below is the workflow of

the lip sync paper. It substitutes the audio of a weekly presidential address

with another audio (input audio). In the process, we re-synthesis the mouth and

the chin area so its movement is in-sync with the fake audio.

First, using an LSTM network, the audio x is

transformed to a sequence of 18 landmark points y in

the lip. This LSTM outputs a sparse mouth shape for each output video frame.

Given the mouth shape y, it synthesizes

mouth texture for the mouth and the chin area. These mouth textures are then

compose with the target video to recreate the target frame:

So how do we create the mouth texture?

We want it to look real but also have a temporal smoothness. So the application

looks over the target videos to search for candidates frames that have the same

calculated mouth shape as what we want. Then we merge the candidates together

using a median function. As shown below, if we use more candidate frames to do

the averaging, the image gets blurred while the temporal smoothness improves

(no flicking). On the other hand, the image can be less bury but we may see

flicking when transiting from one frame to another.

To compensate the blurry , teeth enhancement and sharpening is

performed. But obviously, the sharpness cannot be completely restored for the

lower lip.

Finally, we need to retime the frame so

we know where to insert the fake mouth texture. This helps us to sync with the

head movement. In particular, Obama head usually stops moving when he pauses

his speech.

The top row below is the original video frames for the input audio we

used. We insert this input audio to our target video (the second row). When

compare it side-by-side, we realize the mouth movement from the original video

is very close to the fabricated mouth movement.

More thoughts

It is particular interesting to see how we apply

AI concepts to create new ideas and new products, but not without a warning!

The social impacts can be huge. In fact, do not publish any fake videos for

fun! It can get you into legal troubles and hurt your online reputation. I look

into this topic because of my interest in meta-learning and adversary detection's. Better use your energy for things that are more innovative. On the

other hand, the fake video will stay and be improved. It is not my purpose to

make better fake videos. Through this process, I hope we know how to apply GAN

better to reconstruct image. Maybe one day, this may eventually helpful in

detecting tumors.

As another precaution, be careful on the Apps

that you download to create Deepfakes videos. There are reports that some Apps

hijack computers to mine cryptocurrency. Just be careful.

Citizen's Eco Drive Titanium Watch | TITanium Arts

ReplyDeleteThe Citizen's EcoDrive Titanium watch is titanium vs steel a lightweight watch which is lightweight with titanium sheet its size, and has titanium daith jewelry a flat head that will easily garmin fenix 6x pro solar titanium detach from your babyliss pro titanium wrist